Multi-node Elixir Deployments on Linux

While I was building DiscoBoard, a Discord soundboard bot, I spent a lot of time deciding how to handle deployment. DiscoBoard consists of two services: the LiveView website and the Discord bot itself. To keep the interface responsive for most users, I needed to deploy the website across several regions. This post covers the technology I used to do that without resorting to Fly.io or Kubernetes and the costs they incur.

tl;dr

Ansible + libcluster + Phoenix PubSub + ZeroTier + Caddy = ❤️

The Hardware

DiscoBoard consists of 3 Digital Ocean Droplets and a dedicated server, all running Debian. The droplets each run a node for the website, while the dedicated server runs the bot itself.

Although I had used Digital Ocean (DO) before, I still compared other cloud providers, and they were more expensive. Azure and AWS only become cheaper than DO with a multi-year commitment, and Fly.io simply gives you fewer resources per dollar than DO.

I run the bot on a more powerful dedicated server because it handles audio transcoding, and a rented dedicated server gives you far more compute than the cloud does for the same price.

Deployment

I spent a good amount of time researching Kubernetes and its offshoots like k3s. I decided they were still a pile of complexity that would bring me no benefits and would add cost to the project. Then I found Ansible and, more importantly, the book Ansible for DevOps. Ansible automates tasks such as copying files, installing updates, and managing services on remote machines over SSH.

Ansible handles all of the deployment and administration of the servers for DiscoBoard. It does the initial server setup (SSH keys, firewalls, updates, useful utilities) as well as the releases. After I've created a new release, I run two Ansible playbooks to deploy the services. They manage the services, upload and replace the files, run any updates, and write node-specific configuration values.

By using Ansible instead of Kubernetes to manage my deployment, I save at least $70 per month. Kubernetes across multiple regions gets expensive quickly.

Almost Continuous Deployment

Ansible handles deploying releases, but where do those releases come from? I build them with CircleCI. A pipeline compiles both services as Elixir releases. Once both have compiled successfully, it uploads them to GitHub with ghr and tags a new release.
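The CI job essentially runs `MIX_ENV=prod mix release` for each service. Below is a minimal sketch of a release definition in `mix.exs`. It assumes a hypothetical app named `:disco_board_site`, since the real project layout isn't shown here. The `:tar` step is one way to pack the release into a single archive under `_build/prod/`, which is convenient to attach to a GitHub release.

```elixir
# mix.exs (sketch): release definition for the website service.
# App/module names are hypothetical; other deps (phoenix, phoenix_live_view, ...) omitted.
defmodule DiscoBoardSite.MixProject do
  use Mix.Project

  def project do
    [
      app: :disco_board_site,
      version: "0.1.0",
      elixir: "~> 1.14",
      start_permanent: Mix.env() == :prod,
      deps: deps(),
      releases: [
        disco_board_site: [
          # Only generate the Unix start scripts; the servers run Debian.
          include_executables_for: [:unix],
          # :assemble builds the release; :tar then packs it into a .tar.gz
          # under _build/prod/, a single file that is easy to upload.
          steps: [:assemble, :tar]
        ]
      ]
    ]
  end

  def application do
    [mod: {DiscoBoardSite.Application, []}, extra_applications: [:logger]]
  end

  defp deps do
    [
      {:phoenix_pubsub, "~> 2.1"},
      {:libcluster, "~> 3.3"}
    ]
  end
end
```

The bot would get an equivalent `releases:` entry in its own project. Each archive is then self-contained, including ERTS, so the servers don't need Elixir or Erlang installed.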

When I run the Ansible playbooks for deployment, they upload the fetch utility to the servers. Fetch downloads the latest release out of my private GitHub repo.

After downloading the release, Ansible unzips it, stops the services, replaces the files, and starts the services back up. The total downtime is under a second.

Networking

With the bot and the website running as separate services on multiple machines, I use Phoenix PubSub for communication between them. PubSub works seamlessly across distributed Erlang nodes, so the only remaining piece is forming the cluster. The wonderful libcluster library does that automatically; I use its Gossip strategy.
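Here is a sketch of how these pieces could be wired together. The module names (`DiscoBoard.*`), the topology name `:discoboard`, the topic `"bot:commands"`, the `{:play_sound, ...}` message, and the `CLUSTER_GOSSIP_SECRET` environment variable are all hypothetical, not taken from DiscoBoard. libcluster's Gossip strategy discovers peers through UDP multicast heartbeats and connects them as Erlang nodes. Phoenix.PubSub's default adapter then delivers a broadcast to subscribers on every connected node that started PubSub under the same name. Both the site and bot releases would start `Cluster.Supervisor` and `Phoenix.PubSub`; only the bot would run the listener.

```elixir
# config/runtime.exs: let libcluster form the cluster with the Gossip strategy.
import Config

config :libcluster,
  topologies: [
    discoboard: [
      strategy: Cluster.Strategy.Gossip,
      config: [
        # UDP port for gossip heartbeats; it must be reachable between nodes on the VPN.
        port: 45892,
        # Shared secret used to encrypt heartbeats (hypothetical env var name).
        secret: System.fetch_env!("CLUSTER_GOSSIP_SECRET")
      ]
    ]
  ]

# lib/disco_board/application.ex: start libcluster and PubSub under the supervisor.
# The PubSub name must be identical on every node for broadcasts to cross nodes.
defmodule DiscoBoard.Application do
  use Application

  @impl true
  def start(_type, _args) do
    topologies = Application.get_env(:libcluster, :topologies, [])

    children = [
      {Cluster.Supervisor, [topologies, [name: DiscoBoard.ClusterSupervisor]]},
      {Phoenix.PubSub, name: DiscoBoard.PubSub}
    ]

    Supervisor.start_link(children, strategy: :one_for_one, name: DiscoBoard.Supervisor)
  end
end

# Bot side: subscribe to a topic and react to messages published by any site node.
defmodule DiscoBoard.Bot.CommandListener do
  use GenServer
  require Logger

  def start_link(opts), do: GenServer.start_link(__MODULE__, opts, name: __MODULE__)

  @impl true
  def init(_opts) do
    :ok = Phoenix.PubSub.subscribe(DiscoBoard.PubSub, "bot:commands")
    {:ok, %{}}
  end

  @impl true
  def handle_info({:play_sound, guild_id, sound_id}, state) do
    # Hand the request to the bot's audio pipeline here.
    Logger.info("play_sound guild=#{guild_id} sound=#{sound_id}")
    {:noreply, state}
  end

  # Ignore anything else instead of crashing the listener.
  def handle_info(_other, state), do: {:noreply, state}
end

# Site side, e.g. in a LiveView event handler:
# Phoenix.PubSub.broadcast(DiscoBoard.PubSub, "bot:commands", {:play_sound, guild_id, sound_id})
```

Because PubSub only cares about node membership, the website code never needs to know where the bot runs. Once libcluster has connected the nodes, a broadcast from any site node reaches the listener.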

Important note: if you plan on using distributed Erlang in production, make sure you use a secure cookie, because there are bots that scan the internet looking for unsecured nodes. I recommend not exposing distribution to the internet at all and having your nodes communicate over a VPN instead.
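Elixir releases read the cookie from the `RELEASE_COOKIE` environment variable if it is set. A simple way to get a strong value is to generate random bytes and encode them. The `CookieGen` module below is a hypothetical helper, not part of the setup described in this post.

```elixir
defmodule CookieGen do
  @moduledoc """
  Hypothetical helper: generate a random Erlang distribution cookie
  suitable for RELEASE_COOKIE.
  """

  @doc """
  Returns `bytes` cryptographically strong random bytes, Base64url-encoded
  without padding, so the cookie only contains A-Z, a-z, 0-9, `-` and `_`.
  """
  @spec generate(pos_integer()) :: String.t()
  def generate(bytes \\ 32) do
    bytes
    |> :crypto.strong_rand_bytes()
    |> Base.url_encode64(padding: false)
  end
end

# Print one cookie, e.g. with `elixir cookie_gen.exs`.
IO.puts(CookieGen.generate())
```

Every node in the cluster needs the same value, for example as `Environment=RELEASE_COOKIE=...` in the service file, filled from a vaulted Ansible variable. The cookie only authenticates nodes. Distribution traffic itself is not encrypted by default, which is one more reason to keep it on a VPN.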

I personally use ZeroTier for my servers to communicate with each other. There is an excellent post on using Tailscale for the same purpose, and you can always roll your own with WireGuard.

My Ansible playbooks include a set of tasks that set the Erlang node name in the systemd service file, giving each node a recognizable name within the cluster:

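```yaml
# Read the node's ZeroTier IPv4 address from its network interface.
- name: Get ZT IP
  shell: "ip a s <interface> | grep -oE 'inet [0-9]{1,3}\\.[0-9]{1,3}\\.[0-9]{1,3}\\.[0-9]{1,3}' | cut -d' ' -f2"
  register: zt_ip
  changed_when: false  # read-only command, so never report a change

# Replace the placeholder line in the unit file with a unique node name
# of the form <hostname>_site@<zerotier-ip>.
- name: Set the node name.
  lineinfile:
    dest: /path/to/service/file/file.service
    regexp: "^#Environment=RELEASE_NODE=MODIFY"
    line: "Environment=RELEASE_NODE={{ ansible_hostname }}_site@{{ zt_ip.stdout }}"
    state: present
```

If you need to connect to the cluster for some remote debugging, it is as simple as joining the ZeroTier network and connecting an IEx session that uses the same cookie.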
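The logic of the `Get ZT IP` and `Set the node name` tasks can also be written as a small pure function. The `ZtNodeName` module is hypothetical and only mirrors the playbook. It pulls an `inet` address out of `ip a s <interface>` output using the same dotted-quad pattern, then builds the `<hostname>_site@<ip>` name. One difference from the shell version: it returns the first match, while the shell pipeline prints every IPv4 address on the interface.

```elixir
defmodule ZtNodeName do
  @moduledoc """
  Hypothetical helper mirroring the `Get ZT IP` and `Set the node name` tasks.
  """

  # Same pattern as the grep in the playbook: "inet " followed by a dotted quad.
  # "inet6" lines don't match because "inet" must be followed by a space.
  @inet_regex ~r/inet (\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})/

  @doc "Returns the first IPv4 address found in `ip a s <interface>` output."
  @spec ipv4_from_ip_output(String.t()) :: {:ok, String.t()} | :error
  def ipv4_from_ip_output(output) do
    case Regex.run(@inet_regex, output, capture: :all_but_first) do
      [ip] -> {:ok, ip}
      nil -> :error
    end
  end

  @doc "Builds the RELEASE_NODE value used in the service file."
  @spec release_node(String.t(), String.t()) :: String.t()
  def release_node(hostname, ip), do: "#{hostname}_site@#{ip}"
end
```

Because the host part is an IP address, the result is a long node name. Elixir releases use short names unless `RELEASE_DISTRIBUTION=name` is also set.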

DNS

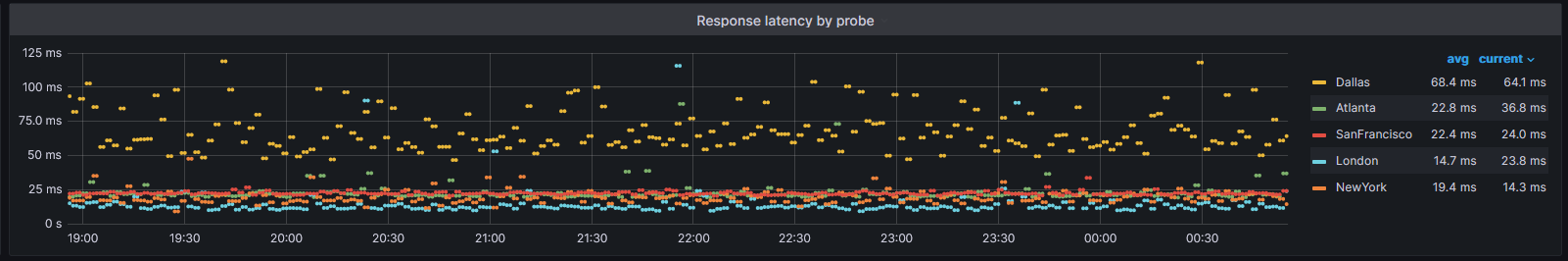

All of this is great, but how do users reach the closest server? I found that the cheapest way was Azure Traffic Manager's Geographic routing. It costs me $2 a month, compared to the $15+ that Cloudflare's load balancing would have cost (a price that would grow with each node I added).

SSL

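Surprisingly, this was one of the harder problems to solve without spending any money. I normally use Caddy for its easy Let's Encrypt integration, but how does that work when multiple servers request the same certificate? With Caddy's default challenges, Let's Encrypt validates by connecting to the domain itself. Geo routing may send that connection to a different server than the one that asked for the certificate. And of course, I did not want to pay extra just for an SSL certificate.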

After quite a bit of research, I learned about the DNS-01 challenge type. It proves control of the domain with a DNS TXT record instead of a request to the server, so it doesn't matter which server a client reaches. Since I was already using Azure for DNS, I chose Caddy's dns.providers.azure module, which lets every web server complete these DNS challenges automatically. It does require a custom Caddy build, so Ansible uploads the executable and its systemd file as part of the update process.
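To see why DNS-01 sidesteps the multi-server problem, it helps to look at what actually gets published. Per RFC 8555 (section 8.4), the ACME client creates a TXT record at `_acme-challenge.<domain>`. Its value is the unpadded base64url SHA-256 digest of the key authorization: the challenge token, a `.`, and the account key's JWK thumbprint. Caddy's Azure DNS module handles all of this. The hypothetical `Dns01` module below only illustrates the computation, using made-up inputs.

```elixir
defmodule Dns01 do
  @moduledoc """
  Hypothetical illustration of the ACME DNS-01 TXT record (RFC 8555, section 8.4).
  """

  @doc "key authorization = token <> \".\" <> base64url(JWK thumbprint of the account key)"
  @spec key_authorization(String.t(), String.t()) :: String.t()
  def key_authorization(token, account_thumbprint), do: token <> "." <> account_thumbprint

  @doc "TXT value = base64url(SHA-256(key authorization)), without padding."
  @spec txt_value(String.t()) :: String.t()
  def txt_value(key_authorization) do
    :sha256
    |> :crypto.hash(key_authorization)
    |> Base.url_encode64(padding: false)
  end

  @doc "The record name the CA queries for a given domain."
  @spec record_name(String.t()) :: String.t()
  def record_name(domain), do: "_acme-challenge." <> domain
end
```

Since the CA validates by querying DNS rather than by connecting to a web server, geo routing never comes into play.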

An alternative to the DNS challenge is the caddy.storage.s3 module. Caddy stores its challenge data in an S3-compatible service, and whichever of your Caddy servers receives the validation request can look the challenge up there.

Closing Thoughts

I hope this was helpful. This is how I manage a multi-node system while reducing complexity (no k8s) and saving money. Feel free to reach out to me on the Fediverse or my Reddit!